So what’s this about?

It’s simple enough in theory. I’m a great devourer of paperback fiction, mainly sci-fi and fantasy, and I often re-read books.

So I have a hoard of thousands of paperbacks, and I have two problems with it. The first is that I’ve been unable to get them out for nearly 10 years, because there is simply no space for them (my wife foolishly prefers to allocate the space to children’s bedrooms, kitchens and the like). The second, and in some ways bigger, issue is that they are gently disintegrating. Some of them have spent 15 or 20 years of their lives in one garage or cellar or another.

To make matters worse, I’m asthmatic and have trouble breathing when I read musty old books, so even if I could find (or build) a home for them, I can’t actually read them any more!

So I hit upon the idea of scanning them and converting them to eBooks. The trigger was actually the release of the iPad. I’d played with the existing eReaders (Kindle, Sony and others), but I didn’t get on with them. I found that turning a page on eInk took just too long, and the flicker as it changed was distracting.

Another factor becoming increasingly important is the small print of many of my books. My eyes just aren’t what they were and the idea that you could change the font size (upwards) was very appealing!

So I set off to work out how to do this. This story details some of my experiences along the way, and I hope it provides some insight into the issues and realities of such a task!

Design Considerations

My business is writing software for people. So I embarked on this much as I would any other project by working out where the risks were and what was necessary for the whole thing to work.

My time is precious

Well, I earn a freelance living by planning, designing, architecting and producing commercial software. Every minute I’m not working is a minute I’m not earning. Well, more or less – I do have a life too! So however I did it, each book had to take a minimal amount of my time to handle, and ideally the kind of time that doesn’t distract from my day job.

There’s a lot of them

I don’t know exactly how many books I’ve got. 10 years ago I had 5 or 6 thousand, and I will have added a thousand or so since then. But I know that some have been destroyed by falling plaster and others have been nibbled by rats. And I don’t know how many of the rest will survive my garage!

But I reckon I’ve got 5,000 or more. At roughly 300 pages a book, that’s at least 1.5 million pages.

I don’t mind some errors, but…

I don’t expect the output to be identical to the original book. If the odd character gets messed up, I’ll probably not notice. But the basic reading experience should be good.

It won’t work right

If there’s one thing I know from a lot of experience with software development, whatever I build won’t work right all the time and I must be in a position to re-do/re-run books later on.

Added Value would be nice

The main thrust is to be able to read my books again, but there are things which would be nice to have. For example, I find myself either buying the second book of a series twice or deciding not to buy a book because (I think!) I have it. Sometimes I do. Sometimes I don’t. And very occasionally when I do, I can’t find it!

The last time my books were all accessible (and in alphabetical order) was ten years ago, but even then I could buy books that I already had on my shelves.

How I decided to make it work

The first thing I tried was using the stock software which came with my HP multifunction printer/fax/scanner. I was actually very impressed with the quality of the conversion and decided at that point that this could be made to work! Of course there’s many a slip ‘twixt proof of concept and production!

I looked at the costs of getting this done professionally. I gave this up VERY quickly. I was getting estimates of around 10 pence a page, which would mean a minimum cost of £150,000. I could buy a library for them for that!
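For anyone checking my sums, here is the arithmetic as a tiny Python sketch. The 300-pages-per-book average is an assumption; it is simply what 5,000 books and 1.5 million pages imply.

```python
# Back-of-envelope figures from the text. AVG_PAGES_PER_BOOK is an
# assumption: it is what 5,000 books and 1.5 million pages implies.
BOOKS = 5_000
AVG_PAGES_PER_BOOK = 300
PENCE_PER_PAGE = 10  # quoted price for professional scanning


def total_pages(books: int, avg_pages: int) -> int:
    return books * avg_pages


def cost_in_pounds(pages: int, pence_per_page: int) -> float:
    return pages * pence_per_page / 100


pages = total_pages(BOOKS, AVG_PAGES_PER_BOOK)
print(f"{pages:,} pages, about £{cost_in_pounds(pages, PENCE_PER_PAGE):,.0f}")
# prints: 1,500,000 pages, about £150,000
```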

Next I posted questions on related forums and asked manufacturers for advice. www.mobileread.com was particularly helpful.

I decided early on that I would have to chop the books up. At this point there will be cries of horror from all bibliophiles! No! You can’t destroy books!

But remember that most of these books had gone past the point of readability (by me, anyway). Think of it as ascending to an electronic heaven rather than being consigned to a council-dump hell!

Also, there is no way whatsoever that I had the time to scan these on a flatbed, or the money to buy a non-destructive book scanner. For those who are interested, there is a site dedicated to home-made book scanners (http://www.diybookscanner.org/), but for me it was impractical.

So my process consists of:

- Guillotine the spine off the book

- Count the pages

- Register the book details (via an ISBN lookup service) in a database

- Scan the book in chunks through a document-feeding scanner into a tiff file

- Check all pages are there and redo any misfeeds

- Pass the tiff files to an OCR program and generate Word and text files

- Transform the Word file into ePub files

- Add the text to the database
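The ISBN registration step is easier if obviously wrong numbers are rejected before they ever reach a lookup service. The text doesn't say whether the scanning program does this; the sketch below is only an illustration using the standard ISBN-10 (mod 11) and ISBN-13 (mod 10, alternating weights 1 and 3) check-digit rules, and the function names are hypothetical.

```python
DIGITS = set("0123456789")


def normalise_isbn(raw: str) -> str:
    """Drop hyphens and spaces, and upper-case a trailing 'x'."""
    return raw.replace("-", "").replace(" ", "").upper()


def is_valid_isbn10(isbn: str) -> bool:
    body, check = isbn[:9], isbn[9:]
    if len(isbn) != 10 or not set(body) <= DIGITS or not (check in DIGITS or check == "X"):
        return False
    values = [int(c) for c in body] + [10 if check == "X" else int(check)]
    # Weights run 10, 9, ..., 1; a valid ISBN-10 sums to a multiple of 11.
    return sum(v * w for v, w in zip(values, range(10, 0, -1))) % 11 == 0


def is_valid_isbn13(isbn: str) -> bool:
    if len(isbn) != 13 or not set(isbn) <= DIGITS:
        return False
    # Weights alternate 1, 3, 1, 3, ...; a valid ISBN-13 sums to a multiple of 10.
    return sum(int(c) * (3 if i % 2 else 1) for i, c in enumerate(isbn)) % 10 == 0


def is_valid_isbn(raw: str) -> bool:
    isbn = normalise_isbn(raw)
    return is_valid_isbn10(isbn) or is_valid_isbn13(isbn)
```

Only numbers that pass would be sent on for lookup; one that fails is probably a typo or a misread barcode.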

That’s the outline of the process. If you’re only passingly interested, this is a good place to stop. The next bit goes into the details of the process and the lessons learnt. If you’re thinking of doing something like this yourself, it is well worth reading on!

Preparation

Cutting the pages free

One thing which is definite about this process: Garbage In Garbage Out. The quality of the end product (and the user time taken) depends very strongly on how well you prepare the books for scanning.

I first tried to cut the spines off the book with a sharp knife, but two things became obvious immediately. Firstly it would take an age to do this – several minutes a book. Secondly, it was very hard to do this without tearing the paper which could lead to jams or tears in the written page, making OCR less reliable.

I’ve heard that some people use a bandsaw, but this creates a lot of paper dust (which would half kill me) that clogs up the scanner. So I ended up with a guillotine like the one on the right, which cost around £180 including delivery. It does a great job of cutting the spines off, but there are some gotchas.

The more equally sized and regular the cut pages are the better. The OCR does very well at de-skewing the pages if they are at an angle, but whilst it still gets the letters right it can mistake the formatting. For example a strongly slanted page might have part of it processed as right justified rather than fully justified.

Cut too much off and you risk losing some letters. That’s really bad! But cut too little off and you end up with the pages still stuck together. For the most part the glue seems to seep further into the pages at the start and end of the book, and less so in the middle. It is worth riffling the pages to see if any are obviously stuck. If they are, use a pair of scissors or the guillotine to trim them. Check the first and last pages particularly, but glue seepage can happen anywhere.

If the book is thick then you must first split it in two (or more). This requires great care and a sharp knife. It’s easy to tear the pages.

I’ve still some work to do on the guillotine. The paper guide (black in the picture above) is free to move in all directions (until clamped), so it’s hard to force it to a right angle. It’s also not easy to estimate how much of the spine you’re cutting off until you’ve clamped it.

Most books (at least mine) have the spine bent, especially the larger ones. That means a certain amount of fiddling around trying to get the book as straight as possible before clamping and cutting. It’s best to make sure the front page is at the top. If it is at the bottom, you are in danger of cutting off too much of it. Not that this will affect the processing, but the front cover looks best as complete as possible!

One of the annoyances is that paperbacks all seem to vary slightly in size, so you can’t just set the guide and slice. The width may vary by 1/4 inch or more, which can lead to trimming too much or too little off. I would like to find some way of inserting/clamping the books so you are trimming an exact amount (rather than leaving an exact amount), but I’ve not worked out how to do that (without major engineering on the guillotine!).

I’m currently estimating that this step takes around a minute per book. Rather less if you have a nice set of thin paperbacks (I have a set of Hammond Innes I’m looking forward to processing). Rather more if you have a collection of thick books in slightly different sizes.

Scanning

Fairly early on I realised that the off-the-shelf packages (and particularly the ones which came with the scanner) just weren’t going to be good enough. I wanted to be able to:

- Record details of the scan

- Add in book information (Author, Title)

- Register the book/scan in a database (partly to prevent duplicate scans, mainly for longer term book searching and so on)

- Fix the scanned images if there were problems

- Scan multiple hoppers of pages into one file

- Mix formats (colour for Cover pages, monochrome for content)

- Have minimal human interaction during the scanning of a book

This ended up with me writing a custom scanning program.

This was not a straightforward task. There are at least three ways of talking to scanners, and both of the free ways (TWAIN, from the TWAIN Working Group, and Microsoft’s WIA) are documented, which is to say, ‘not very well’. The ISIS driver model is probably better supported, but also quite costly. I ended up settling on WIA (Windows Image Acquisition) as the best combination of free and modern. I’ve used TWAIN-based software in the past and it always struck me as clunky.

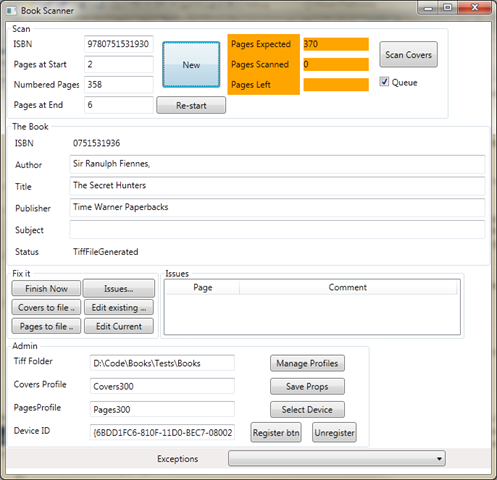

Scanning Program

The scanning program (shown above) works like this:

- In Scan, you enter the ISBN (or scan the barcode with a handheld scanner)

- The title and author are retrieved with a service from isbndb.com and you may enter the ‘subject’.

- You enter the number of pages. Actually, I put in the number before the first numbered page and the number after the last numbered page, which saves me adding them together. I had in mind to work out automatically which book page a scanned page corresponded to, for repair purposes, but I haven’t really used it.

- You click New and you can then start the scanning process. New will store a book record in the database.

- First you put in the front and end pages which are scanned in colour

- When this is done, you put in the succeeding pages (in bunches of about 50-60 sides) and they are scanned in monochrome

- When all the pages are done (and if the count of pages scanned matches the expected count), the images are written to a compressed tiff file and this is moved to a queue directory.

- If there are issues during the process (paper jams, misfeeds and so on), you can rescan some of the pages and/or edit the resulting tiff file using the tools in the Fix it section. You can also record which pages the issue occurred on with the Issues … button.
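The page-count check in these steps can be sketched as follows. This is a hypothetical illustration, not the program's actual code: it assumes the two figures entered are the number of sides before page 1 and the number the final side would carry if the book's numbering ran to the end, which is one reading of the description above.

```python
from dataclasses import dataclass


@dataclass
class PageCount:
    """The two figures typed in at registration (hypothetical field names)."""
    sides_before_page_one: int  # unnumbered front matter
    number_of_last_side: int    # assumed: numbering continued to the last physical side

    @property
    def expected_sides(self) -> int:
        return self.sides_before_page_one + self.number_of_last_side


def check_scan(count: PageCount, sides_scanned: int) -> str:
    """Compare the scanned total with the expected total before writing the tiff."""
    diff = sides_scanned - count.expected_sides
    if diff == 0:
        return "ok"
    if diff < 0:
        return f"missing {-diff} side(s): look for misfeeds, or a miscount"
    return f"{diff} extra side(s): look for double scans, or a miscount"
```

A mismatch is only a prompt to look; as noted later, a wrong count can just as easily come from the person typing it in.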

The scanning and tiff generation part is written in C++ and called (as a COM/ActiveX DLL) from a WPF C# program which does the rest.

In theory, with WIA you can set up ‘Profiles’, which provide a configuration with a UI to set it. I thought this would be ideal: you could pick a resolution, page size and so on without having to set them explicitly in code (which would make the application scanner-specific). However, I found that the profiles only seem to save some of the properties, and that they had to be loaded explicitly in any case. With my scanner (Fujitsu fi-6130), the profile saved the horizontal resolution but not the vertical resolution, leading to large files with stretched-out, hard-to-process characters. So I ended up adding some properties (e.g. vertical resolution) to the profile by hand. This now works for configuring the scanner in my program, but prevents the profiles loading in any other software! If I have the energy I will look at this again some time.

The scanner program is attached to a hot key so that I can do ‘next scan’ with a single keystroke without the program being on top.

Tiff Editor

The Tiff editor (C#/WPF) is optimised for scanning through the pages of the book, deleting ranges, inserting ranges and so on. By setting the Offset (the index of the page which is numbered ‘1’ in the book), the Page number should match that of the page on screen, and you can quickly jump through the book and see where pages are missing. So if you have noted that pages 17 and 18 were misfed, you can navigate there, delete 2 pages and insert a scan of those 2 sides, which you make with the ‘PagesToFile’ button in the main program. It would be nice to have this more strongly linked, but it’s not worth doing at the moment.

The one thing I’ve not done (yet) is to allow it to reverse the order of some pages. I’ve only had this happen once so far and simply rescanned the pages in the right order.
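The Offset idea amounts to a simple mapping between image indices and printed page numbers. A minimal sketch, assuming 0-based image indices and representing the tiff's pages as a plain list (the names are hypothetical):

```python
from typing import List, TypeVar

Page = TypeVar("Page")  # an image, a filename, anything


def book_page_number(index: int, offset: int) -> int:
    """Printed page number of the image at 0-based `index`, where `offset`
    is the index of the image that carries printed page 1."""
    return index - offset + 1


def image_index(page_number: int, offset: int) -> int:
    """Inverse of book_page_number."""
    return page_number - 1 + offset


def replace_pages(pages: List[Page], first_page: int, count: int,
                  rescanned: List[Page], offset: int) -> List[Page]:
    """Delete `count` images starting at printed page `first_page` and
    insert the rescanned images in their place (the counts may differ)."""
    start = image_index(first_page, offset)
    return pages[:start] + rescanned + pages[start + count:]
```

With an Offset of 4, printed pages 17 and 18 sit at images 20 and 21, so "delete 2, insert 2" becomes a single slice replacement.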

I was particularly keen to keep the tiff files so that I can reprocess them later if I find program or operational issues, if a new and better OCR program comes along, or if I find a major corruption whilst reading and want to refer to the original. However, even compressed (LZW), these files run to between 500MB and over 2GB. I’m not too troubled about the size per se – terabytes are cheap these days and I’ve currently got a few to spare. However, saving a 2GB tiff file (which involves recompressing all the individual images) is slow. So in both the editor and the scanner program, copying the final tiff to the processing directory happens on a separate thread.
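A minimal sketch of handing that final copy to a background thread, written in Python rather than the C#/C++ of the actual tools. The ".partial"-then-rename step is an added assumption, so that anything watching the queue never sees a half-written file:

```python
import shutil
import threading
from pathlib import Path


def copy_to_queue_in_background(src: Path, queue_dir: Path) -> threading.Thread:
    """Copy a finished tiff into the processing queue on a worker thread.

    The copy is written under a temporary '.partial' name and renamed once
    complete, so a watcher on queue_dir never picks up a half-written file.
    """
    def worker() -> None:
        queue_dir.mkdir(parents=True, exist_ok=True)
        partial = queue_dir / (src.name + ".partial")
        shutil.copyfile(src, partial)
        partial.replace(queue_dir / src.name)  # same-directory rename

    thread = threading.Thread(target=worker, name=f"copy-{src.name}")
    thread.start()
    return thread
```

The thread is non-daemon (it inherits that from the main thread), so closing the program waits for an in-flight copy rather than leaving a truncated file behind.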

Actually Scanning

After some research I settled on a Fujitsu fi-6130 scanner, which cost me around £500 and was the biggest outlay of the project. I chose it in preference to the lower-end Fujitsu range because it seemed to be aimed at higher volumes, and 1.5 million pages probably qualifies. Having said that, it may be cheaper to throw a couple of SnapScans away… The online reviews for Fujitsu scanners were also considerably more positive than for any others in this price range.

I would have liked a hopper big enough to take a whole book at once. But that’s 6 grand and WELL over budget. I’m also not entirely sure how well a scanner would handle 600 paperback pages at once, or what would happen if it started to screw up on page 10! The Fujitsu manages around 50 sides (25 leaves) at once. But beware of the specifications for these devices: a capacity of 50 pages means 50 sides, which is only 25 leaves of a book!

I am delighted with the quality of the scanner. In particular, the reliability of the page feeding is extremely good (far better than I would have believed possible). Provided that no pages are glued, it handles most books without a misfeed, jam or double feed. My page-number checking has revealed more defects in my counting skills than double feeds through the scanner.

I’ve seen three classes of problems with the scanner.

- Glued pages. These are more likely to result in a page being pulled through sideways than in a double feed, though that does happen. After a while you get a feel for glued pages as you riffle them slightly; a stiffness is how I would describe it. But watch out, you can get into all sorts of messes here, even to the extent of a page tearing as it is pulled through. The one time this happened, I was able to smooth the page and feed it through again. On my to-do list (though I probably won’t get to it) is linking this up somehow to my flatbed, so that badly damaged pages can be put through gently.

- Tilted pages. If a page is tilted in the hopper it is more likely to misfeed, pulling itself through at an angle or even tearing. This can be minimised in two ways. First, try to keep the cut pages rectangular. If you cut at an angle (even a slight one), this is much more likely to happen. This is harder than you might think, since the book can skew through its height and it can be hard to get the spine dead straight (see my comments on Preparation above). The other thing is to make sure that the page guides are tapped tight every time you put a batch of paper in. With the best will in the world, even if your edges are straight, it’s almost impossible to get the spine straight with thicker books, resulting in wider (or narrower) pages as you go down through the cut. A gentle tap each time you put a batch in really helps here.

- Upside-down feeds. I don’t understand this exactly, but with some books (and it is certain books), the pages end up feeding through sort of upside down. I think what happens is that the bottom of the page sticks on the guide and the roller pulls the rest through, effectively inverting the page. The books this happens with have pages which seem to lean forward in the hopper. I suspect they are thinner than most, or more flexible, or something. When this happens once with a book, it is likely to happen again. I have no resolution except, perhaps, putting fewer pages in when I see them leaning forward. This may reduce the chance of it happening, or at least mitigate the damage! In desperate straits you can always feed the pages one by one…

The specs for the scanner say no more than 50 or 60 pages (sides, really). If you have a problem book (any issues at all), then feed in fewer (20 pages/40 sides, perhaps). In general I don’t worry too much. I sometimes find myself putting 60 or 70 pages in, but only when I think the book quality can take it. In the early days I tried to stuff as much into the hopper as would physically fit, and it caused no end of problems.

Also, I don’t generally riffle the pages as recommended (despite what I said in the ‘glued pages’ section above!). I do bend them a little both as I pick them up and as I put them in the hopper. Riffling takes a much longer time (and releases more paper dust into my lungs) and I reserve that for books which seem suspect.

The scanner (at 300dpi resolution) scans about one or two pages per second (in monochrome), so a 300-page book takes 4 or 5 minutes to scan, in about 8 hopper loads. The system as it stands is sufficiently smooth that loading the hopper doesn’t distract from work.
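Those figures are easy to sanity-check. A small sketch; the function name is hypothetical, and the 40 sides per load is an illustrative assumption chosen to match "about 8 hopper loads" for a 300-page book:

```python
import math
from typing import Tuple


def scan_estimate(sides: int, sides_per_second: float, sides_per_load: int) -> Tuple[float, int]:
    """Return (pure feeding time in minutes, number of hopper loads)."""
    minutes = sides / sides_per_second / 60
    loads = math.ceil(sides / sides_per_load)
    return minutes, loads


for rate in (1.0, 2.0):
    minutes, loads = scan_estimate(300, rate, sides_per_load=40)
    print(f"{rate:.0f} side/s: {minutes:.1f} min feeding, {loads} loads")
# prints:
# 1 side/s: 5.0 min feeding, 8 loads
# 2 side/s: 2.5 min feeding, 8 loads
```

Pure feeding time comes out at 2.5 to 5 minutes, which is consistent with the 4 or 5 minutes quoted once loading is included.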

Converting

FineReader OCR

This, of course, is where the magic happens. I looked at both FineReader 10 and OmniPage 17, and in the end I plumped for FineReader: in the tests I did, it just did better. The particular thing which swayed me was that OmniPage would sometimes get the boldness wrong. I would have a page which was perfect, and the next page (or part of it) would be in bold, though the original was not. This seemed to happen quite a lot, whereas FineReader was much better.

It’s hard to estimate the error rate for the OCR. Apart from anything else, I’m a big picture sort of person, and not a good proofreader. However I would estimate the error rate for character recognition in the errors per 10,000 characters range. Which is a damned sight better than my typing!

Where things fall apart a little is with images in the book. FineReader tends to be a little over-enthusiastic in extracting text from these images, so things like a map may have parts of the image excised, with the text placed as text nearby. It also treats blank pages as images, so you end up with lots of pictures of empty pages. To be fair, if you process items by hand then you can mark areas as text, image or table, overriding the FineReader options.

Also, it doesn’t find chapter points quite as I would like. It often finds some, but not all.

Don’t get me wrong, I’m delighted with the quality of the output. Again, it is better than I would have believed possible before I started this project. It even usually gets the detailed formatting (bold, italic, font type and size) right.

My main gripe with this version of the product is that the Word 2007 files it produces work fine in Word, but don’t conform to the strict Open XML standard, which means I have to convert them (through Word) before I can process them. This adds an extra step, requires Word 2007 on the processing machine, and is a right pain.

The other complication with FineReader (the cheap version!) is that there are few automation facilities. The (much) more expensive Corporate version will do folder watching, but I’m not sure it will produce several formats from one file drop. In the end I used AutoHotKey to drive FineReader which works well enough, but can be a bit fragile. Specifically, if you are messing around in the UI when AutoHotKey issues a command (e.g. File/Save As/Text…), then the operation won’t necessarily work. Fortunately, I’ve a spare quadcore machine which I’ve allocated to this purpose. This system would not work well if I had to run it on my main machine whilst working. However, there is no reason the conversion can’t take place separately to the scanning – you could set this running overnight, for example.
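The queue directory that the scanner writes to is exactly what such a folder watcher would consume. A minimal polling sketch, assuming one tiff per book and a `handle` callback that kicks off the conversion; the names and the 30-second interval are hypothetical:

```python
import time
from pathlib import Path
from typing import Callable, Set


def poll_queue(queue_dir: Path, handle: Callable[[Path], None],
               seen: Set[Path], pattern: str = "*.tif") -> int:
    """One polling pass: pass each tiff not seen before to `handle`, in name order.

    Returns how many files were handled on this pass."""
    handled = 0
    for path in sorted(queue_dir.glob(pattern)):
        if path in seen:
            continue
        handle(path)
        seen.add(path)
        handled += 1
    return handled


def watch_queue(queue_dir: Path, handle: Callable[[Path], None],
                interval_s: float = 30.0) -> None:
    """Poll forever; intended for an unattended conversion machine."""
    seen: Set[Path] = set()
    while True:
        poll_queue(queue_dir, handle, seen)
        time.sleep(interval_s)
```

In this setup `handle` might run something like `subprocess.run(["AutoHotkey.exe", "convert.ahk", str(path)], check=True)`, where `convert.ahk` stands for a hypothetical script that drives FineReader; running conversions one at a time also avoids two scripts fighting over the UI.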

FineReader cost me around £75.

Output Formats

The format the iPad takes is ePub, which is among the more popular and less proprietary formats. When I started this project I assumed that the final conversion from one of the FineReader output formats (Word, HTML, Text, PDF) would be straightforward. Not so (you probably guessed this was coming!). There were a number of problems with the output.

Firstly, the images issue (described above) meant a perfectly good eBook would have lots of pictures of empty pages and partial images. This is irritating.
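One way to spot these largely blank images is to measure how much "ink" they contain. A sketch, assuming 8-bit greyscale pixel values and a 0.5% ink threshold that would need tuning against real scans; this is not necessarily the approach the converter takes:

```python
from typing import Iterable


def ink_fraction(grey_values: Iterable[int], dark_threshold: int = 128) -> float:
    """Fraction of pixels darker than dark_threshold (8-bit greyscale, 0 = black)."""
    total = dark = 0
    for value in grey_values:
        total += 1
        if value < dark_threshold:
            dark += 1
    return dark / total if total else 0.0


def is_largely_blank(grey_values: Iterable[int], max_ink: float = 0.005) -> bool:
    """Treat an image as blank if at most 0.5% of it is 'ink' (a guess to tune)."""
    return ink_fraction(grey_values) <= max_ink
```

With Pillow installed, `Image.open(path).convert("L").tobytes()` gives suitable input, since iterating over a bytes object yields integers from 0 to 255.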

Secondly, depending on the approach, you would end up with images cropped in strange ways (no, I have no idea why; I think that was the FineReader->Html->Calibre route).

Worst of all was the way in which the Word Styles were produced.

In fact there are a number of levels of formatting which can be included in the output. The two I considered were Editable and Formatted. Basically, Editable tries to keep the pagination right, and includes more layout information whereas Formatted sticks mainly with character level formatting (I may be wrong about this in detail, since it is not described anywhere except in overview). I chose Editable, because I felt that the additional information would be helpful – particularly pagination, mainly so I could improve chapter identification and so on. I’m not 100% sure this was the right choice in hindsight, but there we are.

The issue (with both formats) is that each paragraph had explicit formatting information in it (for things like left and right margins, first-line indent, and paragraph and line spacing). The Editable format was particularly bad here, since it had absolute line spacing in every paragraph, leading to a mess in an eBook reader if you tried to change the font size (or if the reader did it for you). Also, each paragraph would have its margins adjusted by half a point here or a point there. Overkill for most situations, I think.

I’ve only found one tool that converts directly from the Word format (Aspose), and that resulted in large ePub files with complex HTML formatting in every paragraph. There was probably more style content than text! And we still had the fixed line spacing issue and the images.

So I bit the bullet and decided to write my own OpenXML / Word to ePub convertor.

The main objectives of this were:

- To put styles in stylesheets as far as possible

- To minimise the number of styles actually used.

- To tidy up the image issue – no blank images in the resulting ePub!

- To split the ePub into sensible chapters.
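The first two objectives boil down to normalising each paragraph's properties and sharing one CSS class among identical ones. A sketch under stated assumptions: paragraphs arrive as dicts with hypothetical property names, margins are rounded to whole points to absorb the half-point jitter, and absolute line spacing is dropped so the reader can reflow text when the font size changes.

```python
from typing import Dict, List, Tuple

# Hypothetical paragraph-property names, loosely modelled on what a Word
# paragraph carries; a real converter would read them from w:pPr.
CSS_PROPERTY = {
    "margin_left_pt": "margin-left",
    "margin_right_pt": "margin-right",
    "first_line_indent_pt": "text-indent",
    "alignment": "text-align",
}
LENGTHS = {"margin_left_pt", "margin_right_pt", "first_line_indent_pt"}
# Line spacing is deliberately absent from CSS_PROPERTY, so it is dropped.

StyleKey = Tuple[Tuple[str, object], ...]


def normalise(props: Dict[str, object]) -> StyleKey:
    kept: Dict[str, object] = {}
    for name, value in props.items():
        if name not in CSS_PROPERTY:
            continue
        if name in LENGTHS:
            value = round(float(value))  # absorb half-point jitter
            if value == 0:
                continue  # zero is the default anyway
        kept[name] = value
    return tuple(sorted(kept.items()))


def consolidate(paragraphs: List[Dict[str, object]]) -> Tuple[List[str], str]:
    """Give each paragraph a shared class name and build the matching stylesheet."""
    classes: Dict[StyleKey, str] = {}
    assigned: List[str] = []
    for props in paragraphs:
        key = normalise(props)
        assigned.append(classes.setdefault(key, f"p{len(classes)}"))
    rules = []
    for key, cls in classes.items():
        decls = "; ".join(
            f"{CSS_PROPERTY[n]}: {v}pt" if n in LENGTHS else f"{CSS_PROPERTY[n]}: {v}"
            for n, v in key)
        rules.append(f"p.{cls} {{ {decls} }}")
    return assigned, "\n".join(rules)
```

Rounding is the design choice doing most of the work: margins of 17.9pt and 18.2pt collapse into one class, so a novel typically ends up with a handful of rules instead of one per paragraph.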

The first thing I did was to remove the start and end pages from the tiff files sent to FineReader, and add the front page to the ePub as a cover instead. FineReader’s enthusiasm for conversion was simply too strong!

Open Xml (2.0) is a format which is marvellously well documented at a low level, but it’s hard to work out how the bits fit together. Also most of the samples deal with generating documents and not with reading Word documents. Word documents (not unreasonably) use Open XML in a complicated and sophisticated way and the standard as a whole is vast and complicated (again not unreasonably). So for now I’ve built something which seems to work quite well and have a means to log any issues. The main things I’ve not bothered with so far are

- Not all the style properties are implemented (e.g. borders and shading)

- Frames (floating text or graphics) – I don’t think paperback novels need these!

- Images. I’ve had a stab at eliminating largely blank images, but there’s a limit to how well this will work. I’ve also treated all images as inline and ignored any positioning or formatting. I’m hoping that the images in my books will remain simple.

- Tables. Not done any work on these at all. These mainly seem to happen with publisher information rather than real text, though I’m fairly sure I’ve got some books which do have tabular data in.

- Chapters. Well, FineReader has a bash at noticing chapter changes and marks them with a bookmark. There are some chapter changes which it misses, but for the most part you will get some kind of division. So I’ve just used the FineReader splits for now, which improves load times and adds some value to the formatting. I will be cleverer later if I need to.
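Splitting at bookmarks can be sketched with nothing more than the standard library, since a .docx is a zip archive whose main body lives in `word/document.xml`. This assumes every bookmark except Word's hidden `_GoBack` marks a chapter, which may not hold for FineReader's naming; tables and other non-paragraph content are skipped, in line with the list above.

```python
import xml.etree.ElementTree as ET
import zipfile
from typing import BinaryIO, Callable, List, Union

W = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def split_chapters(docx: Union[str, BinaryIO],
                   is_chapter_mark: Callable[[str], bool] = lambda name: name != "_GoBack",
                   ) -> List[List[str]]:
    """Return the document's paragraph texts grouped into chapters,
    starting a new chapter at each bookmark accepted by is_chapter_mark."""
    with zipfile.ZipFile(docx) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    body = root.find(f"{W}body")
    if body is None:
        raise ValueError("word/document.xml has no w:body")

    chapters: List[List[str]] = [[]]
    for block in body:  # top-level paragraphs, tables, bookmarks, sectPr
        # iter() includes `block` itself, so body-level bookmarks count too.
        names = [m.get(f"{W}name", "") for m in block.iter(f"{W}bookmarkStart")]
        if chapters[-1] and any(is_chapter_mark(n) for n in names):
            chapters.append([])
        if block.tag == f"{W}p":
            chapters[-1].append("".join(t.text or "" for t in block.iter(f"{W}t")))
    return chapters
```

Each inner list would then become one XHTML file in the ePub, which is what keeps load times down on the reader.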

The Database

All of this stuff has been pushed into a database of course (including the text of each book). For now it’s kind of write only, but once I have a few more books in I will make it a bit more useful. Searching for books (including free text), compiling series … All that sort of thing. But for now it will remain simple.
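A minimal sketch of such a database using SQLite. The schema is an assumption, not the one actually used: a UNIQUE ISBN column rejects duplicate registrations (which also answers the "do I already own this?" question), and plain `LIKE` matching stands in for proper free-text search.

```python
import sqlite3
from typing import List, Optional

SCHEMA = """
CREATE TABLE IF NOT EXISTS book (
    id      INTEGER PRIMARY KEY,
    isbn    TEXT NOT NULL UNIQUE,  -- UNIQUE is what rejects a second scan
    title   TEXT NOT NULL,
    author  TEXT NOT NULL,
    subject TEXT,
    body    TEXT                   -- OCR text, added after conversion
)
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def register_book(conn: sqlite3.Connection, isbn: str, title: str, author: str,
                  subject: Optional[str] = None) -> bool:
    """Insert a new book; return False if this ISBN is already registered."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO book (isbn, title, author, subject) VALUES (?, ?, ?, ?)",
                (isbn, title, author, subject))
        return True
    except sqlite3.IntegrityError:
        return False


def add_text(conn: sqlite3.Connection, isbn: str, body: str) -> None:
    with conn:
        conn.execute("UPDATE book SET body = ? WHERE isbn = ?", (body, isbn))


def search(conn: sqlite3.Connection, phrase: str) -> List[str]:
    """Titles whose title, author or text contains `phrase` (case-insensitive for ASCII)."""
    pattern = f"%{phrase}%"
    rows = conn.execute(
        "SELECT title FROM book WHERE title LIKE ? OR author LIKE ? OR body LIKE ? "
        "ORDER BY title", (pattern, pattern, pattern))
    return [title for (title,) in rows]
```

For real free-text search over thousands of books, SQLite's FTS5 extension would be the natural next step, if the SQLite build includes it.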

Summary

Yes you can digitise your own books.

And you can do it with fairly minimal time overhead.

However, you certainly have to be prepared to discard the books afterwards!

Whilst I’ve been writing this piece, I’ve been digitising books whose spines I’d already cut off. I’ve done 10, mainly biggish books, so that’s around 5,000 pages. I’ve not had a single misfeed, though I’ve typed in the number of pages wrongly twice! I’ve had one book with some stuck pages, which I spotted before I tried to load it and re-trimmed with the guillotine.

I suppose that means I could get through 20 a day or 100 a week (my 5000 books in one year), but I doubt I could keep up that rate week in week out. What’s going to slow me down are the points in my work where I really really can’t stop. The process of loading and continuing the scan isn’t onerous and doesn’t distract me to speak of, though if I was really focussing on a problem I wouldn’t want to do it.

However, registering the book details takes a bit more concentration, and I would be far less likely to do this whilst doing something complex.

I’ve not lived with this long enough to know how well the eBooks will work out, but early signs are promising.

Now, of course, having spent a good deal of time cobbling together something that works, I’m interested in seeing if I can commercialise it!

So if anyone has ideas on this, or would just like to discuss my experiences, do drop me an email at IAIN at IDCL dot CO dot UK!